Chat with Your Documents: How RAG Connects LLMs to Your Private Data

Large Language Models (LLMs) like GPT and Claude are incredibly powerful. They’re trained on vast volumes of internet data, allowing them to answer questions, translate languages, and even write code. But they have one fundamental limitation: they don’t know your data.

An LLM has no idea what’s in your company’s internal wiki, your latest project’s technical documentation, or the 50-page PDF you just saved. Its knowledge is frozen in time, based on the data it was trained on.

So, how do we bridge this “knowledge gap”? The traditional answer was to “fine-tune” or “retrain” the model, an expensive and complex process. But today, there’s a much more cost-effective and powerful approach: Retrieval-Augmented Generation (RAG).

What is RAG (and Why Do You Need It)?

RAG is the process of optimizing an LLM’s output by first referencing an authoritative knowledge base outside its training data. In simple terms, RAG gives the LLM a “cheat sheet” just before it answers your question. Instead of asking the model, “What is our company’s new product policy?” (a question it can’t answer), you first find the relevant policy document, hand it to the model, and then ask, “Using this document, what is our new product policy?”
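To make the “cheat sheet” idea concrete, here is a minimal sketch of the difference between a plain prompt and an augmented one. The helper name `build_augmented_prompt` and the sample policy text are hypothetical, chosen only for illustration, and no model is actually called.

```python
def build_augmented_prompt(question: str, documents: list[str]) -> str:
    """Prepend supplied documents to the question (hypothetical helper)."""
    context = "\n\n".join(f"- {doc}" for doc in documents)
    return (
        "Use the following context to answer the question.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


question = "What is our company's new product policy?"
# Made-up policy text standing in for a document found in your knowledge base.
policy = "Returns are accepted within 30 days of purchase."

plain_prompt = question  # the model has nothing to base an answer on
augmented_prompt = build_augmented_prompt(question, [policy])
print(augmented_prompt)
```

The only difference between the two prompts is the context section; the rest of a RAG system exists to decide which documents go into it.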

This approach is transformative because it:

- Makes LLMs relevant: It connects them to specific domains and an organization’s internal knowledge.

- Keeps information current: You can add new documents to your knowledge base at any time.

- Reduces “hallucinations”: Grounding the answer in a supplied text makes fabricated claims less likely, and each answer can be traced back to a source document.

- Is cost-effective: It’s far cheaper than retraining a massive model every time your data changes.

How to Build a RAG Pipeline: A Practical Example ✨

RAG isn’t a single product but an architectural pattern. To make it concrete, let’s look at the high-level workflow of a demo project, RAGapp on GitHub, which lets you chat with your PDFs.

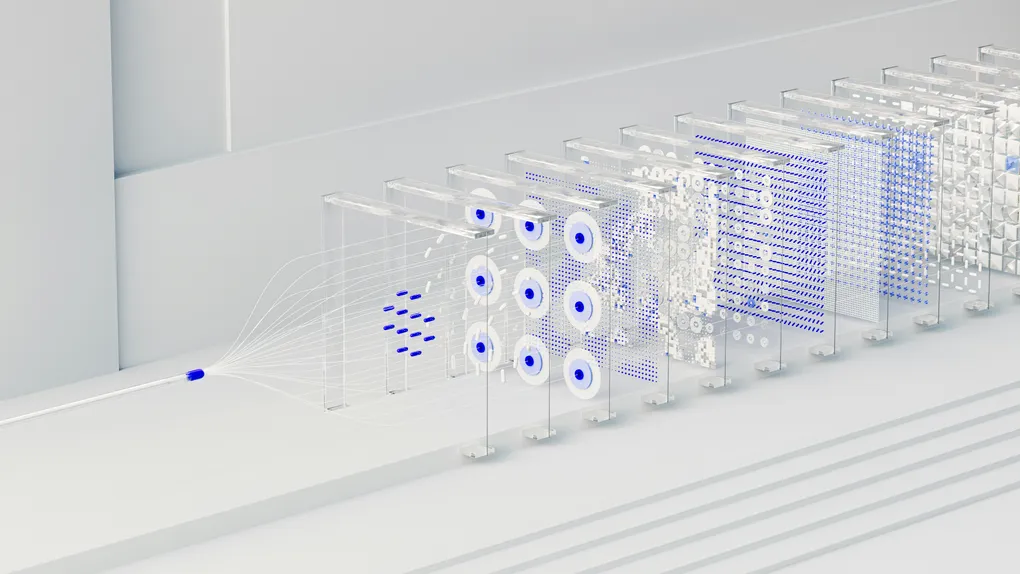

This process is broken into two main phases: Ingestion (building the “memory”) and Querying (asking a question).
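Before looking at each phase in detail, the overall data flow can be sketched in plain Python. Everything here is a simplified stand-in and not RAGapp's code: `chunk_text`, `ingest`, and `query` are hypothetical names, and word overlap replaces real embeddings so the example runs without any external service.

```python
import re


def chunk_text(text: str, size: int = 200, overlap: int = 50) -> list[str]:
    """Split text into fixed-size character windows that overlap slightly."""
    step = size - overlap
    return [text[i:i + size] for i in range(0, len(text), step)]


def words(text: str) -> set[str]:
    """Lowercased word set; a crude stand-in for an embedding."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def ingest(documents: list[str]) -> list[tuple[set[str], str]]:
    """Phase 1: chunk every document and index each chunk."""
    index = []
    for doc in documents:
        for chunk in chunk_text(doc):
            index.append((words(chunk), chunk))
    return index


def query(index, question: str, top_k: int = 1) -> list[str]:
    """Phase 2: rank chunks by word overlap with the question."""
    q = words(question)
    ranked = sorted(index, key=lambda item: len(q & item[0]), reverse=True)
    return [chunk for _, chunk in ranked[:top_k]]


docs = [
    "Refund requests must be filed within 30 days of delivery.",
    "The office is closed on public holidays.",
]
index = ingest(docs)
print(query(index, "How many days do I have to request a refund?"))
```

Running this prints a list containing only the refund sentence, because it shares the words “refund” and “days” with the question. A real pipeline keeps the same shape but swaps the word sets for embeddings and the list for a vector database.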

Phase 1: The Ingestion Pipeline (Indexing Your Knowledge)

Before you can ask questions, you must build your external knowledge base. In the RAGapp project, this is an automated background process.

- Load and Chunk: The process starts when a user uploads a PDF. Sending an entire 100-page document to an LLM with every question is slow, expensive, and may exceed the model’s context window. The system therefore reads the PDF’s text (using a tool like LlamaIndex) and breaks it into smaller, manageable chunks (e.g., passages of roughly 1,000 characters).

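```python
from llama_index.core.node_parser import SentenceSplitter
from llama_index.readers.file import PDFReader

# Assumed setup (not shown in the original); SentenceSplitter sizes are in tokens.
splitter = SentenceSplitter(chunk_size=1000, chunk_overlap=200)


def load_and_chunk_pdf(path: str) -> list[str]:
    # Read the PDF and skip any document with no extractable text.
    docs = PDFReader().load_data(file=path)
    texts = [d.text for d in docs if getattr(d, "text", None)]
    chunks = []
    for t in texts:
        chunks.extend(splitter.split_text(t))
    return chunks
```

- Embed: Each chunk of text is then converted into a numerical “fingerprint” called an embedding, produced by a dedicated embedding model. The RAGapp project uses Amazon Bedrock’s amazon.titan-embed-text-v2:0 model, which by default turns each chunk into a list of 1024 numbers.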

```python
import json

import boto3

EMBED_MODEL = "amazon.titan-embed-text-v2:0"
# Assumed client setup; region and credentials come from your AWS configuration.
bedrock_client = boto3.client("bedrock-runtime")


def embed_texts(texts: list[str]) -> list[list[float]]:
    """Embed a list of texts using Bedrock's embedding model.

    This function calls Bedrock one text at a time and returns a list of vectors.
    """
    all_embeddings = []
    for text in texts:
        body = json.dumps({"inputText": text})
        response = bedrock_client.invoke_model(
            body=body,
            modelId=EMBED_MODEL,
            accept="application/json",
            contentType="application/json",
        )
        response_body = json.loads(response.get("body").read())
        embedding = response_body.get("embedding")
        all_embeddings.append(embedding)
    return all_embeddings
```

- Store: These numerical embeddings, along with the original text, are stored in a specialized vector database. RAGapp uses Qdrant for this. A vector database is like a library that organizes books by their meaning and concepts, not just their titles.
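To show what “organizing by meaning” amounts to in practice, here is a toy in-memory store. It is a conceptual sketch only: `ToyVectorStore` and its `upsert` and `search` methods are hypothetical names and not Qdrant's API, and the three-dimensional vectors are hand-made so the example runs on its own. Closeness is measured with cosine similarity, a common choice for comparing embeddings.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


class ToyVectorStore:
    """In-memory stand-in for a vector database (not Qdrant's API)."""

    def __init__(self) -> None:
        self._points: list[tuple[list[float], str]] = []

    def upsert(self, vectors: list[list[float]], texts: list[str]) -> None:
        # Keep each embedding next to the text it was computed from.
        self._points.extend(zip(vectors, texts))

    def search(self, query_vec: list[float], top_k: int = 5) -> list[str]:
        # Brute-force scan over every stored point, ranked by similarity.
        ranked = sorted(
            self._points,
            key=lambda p: cosine_similarity(query_vec, p[0]),
            reverse=True,
        )
        return [text for _, text in ranked[:top_k]]


store = ToyVectorStore()
# Hand-made 3-dimensional vectors; the Titan v2 embeddings above have 1024 values.
store.upsert(
    [[0.9, 0.1, 0.0], [0.0, 0.2, 0.9]],
    ["Refund policy chunk", "Holiday schedule chunk"],
)
print(store.search([1.0, 0.0, 0.0], top_k=1))
```

The query vector points in nearly the same direction as the first stored vector, so the search returns the refund chunk. Production vector databases typically replace the brute-force scan with an approximate nearest-neighbor index so that lookups stay fast across millions of chunks.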

Phase 2: The Query Pipeline (Finding the Answer)

This is where the RAG magic happens. When a user asks a question, the system uses the knowledge base it just built.

- Embed the Query: First, the user’s question is converted into an embedding using the exact same model (Titan v2). This gives us a numerical “fingerprint” of the question’s meaning.

- Retrieve (The “R”): The system searches the Qdrant vector database to find the text chunks whose embeddings are most “similar” to the question’s embedding. This is the Retrieval step. It effectively finds the most relevant paragraphs from all the uploaded PDFs.

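```python
def _search(question: str, top_k: int = 5):
    # Embed the question with the same model used at ingestion time.
    query_vec = embed_texts([question])[0]
    # QdrantStorage and RAGSearchResult are project-level helpers not shown here.
    store = QdrantStorage()
    found = store.search(query_vec, top_k)
    return RAGSearchResult(contexts=found["contexts"], sources=found["sources"])
```

- Augment & Generate (The “AG”): The system then assembles a new prompt. This prompt contains: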

- The most relevant text chunks (the “context” or “cheat sheet”).

- A system instruction (e.g., “You answer questions using only the provided context.”).

- The original user question.

This complete prompt is sent to an LLM (such as Claude 3 Haiku, anthropic.claude-3-haiku-20240307-v1:0, via Amazon Bedrock). The LLM then Generates an answer that it has been instructed to base only on the context you provided.

```python
def _invoke_llm(context_block: str, question: str) -> dict:
    # Combine the retrieved context and the user's question into one message.
    user_content = (
        "Use the following context to answer the question. \n\n"
        f"Context:\n{context_block}\n\n"
        f"Question: {question}\n"
        "Answer concisely using the context above."
    )
    body = {
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": 1024,
        "temperature": 0.2,
        "system": "You answer questions using only the provided context.",
        "messages": [{"role": "user", "content": user_content}],
    }
    response = bedrock_client.invoke_model(
        modelId="anthropic.claude-3-haiku-20240307-v1:0",
        body=json.dumps(body),
        accept="application/json",
        contentType="application/json",
    )
    return json.loads(response.get("body").read())


# Build the context block from the retrieved chunks
# (`found` is a search result such as the one `_search` returns).
context_block = "\n\n".join(f"- {c}" for c in found.contexts)
```

The Tools That Make It Work 🧩

The RAGapp project provides a great snapshot of a modern RAG stack:

- Interface: Streamlit provides a fast, simple web UI for uploading files and chatting.

- Orchestration: Inngest and FastAPI manage the background tasks, ensuring the ingestion and query pipelines run reliably.

- Data Processing: LlamaIndex provides the tools for reading PDFs and splitting them into chunks.

- Vector Database: Qdrant acts as the scalable, searchable “memory” for the document vectors.

- AI Models: Amazon Bedrock provides managed access to the embedding models (Titan) and the LLMs (Claude) needed to power the whole system.

Conclusion

Retrieval-Augmented Generation is one of the most practical and impactful advancements in the AI space. It’s the key to unlocking an LLM’s potential by securely connecting it to your own domain-specific, private, and up-to-date information. As projects like RAGapp demonstrate, the tools to build your own RAG pipeline are more accessible than ever, allowing you to move beyond general-purpose chatbots and create truly personalized, knowledgeable AI assistants.