Unlocking Vision: A Beginner's Guide to How Computers 'See'.

Have you ever wondered how your phone can recognize faces in photos or how a self-driving car can identify a stop sign? The magic behind this technology is computer vision, and its powerhouse is a special kind of deep learning model called a Convolutional Neural Network (CNN).

In this guide, we’ll demystify CNNs and explore how they learn to classify images and detect objects, turning pixels into meaningful predictions.

#It All Starts with Data: What is an Image?

To a computer, an image isn’t a cat or a car—it’s just a grid of numbers. This grid has three dimensions:

- Height: The number of pixels from top to bottom.

- Width: The number of pixels from left to right.

- Color Channels: The depth of the image, representing its color composition.

A standard color image uses three channels: Red, Green, and Blue (RGB). Each pixel is a combination of these three values (each from 0 to 255). A grayscale image, on the other hand, has only one channel, with each pixel represented by a single value for its intensity.

#The Core Idea: Thinking Locally, Not Globally

So, how does a neural network learn from this grid of numbers? In previous models, you might have used dense layers, where every neuron is connected to every single pixel from the input image. This “global” approach forces the network to learn patterns based on their exact location.

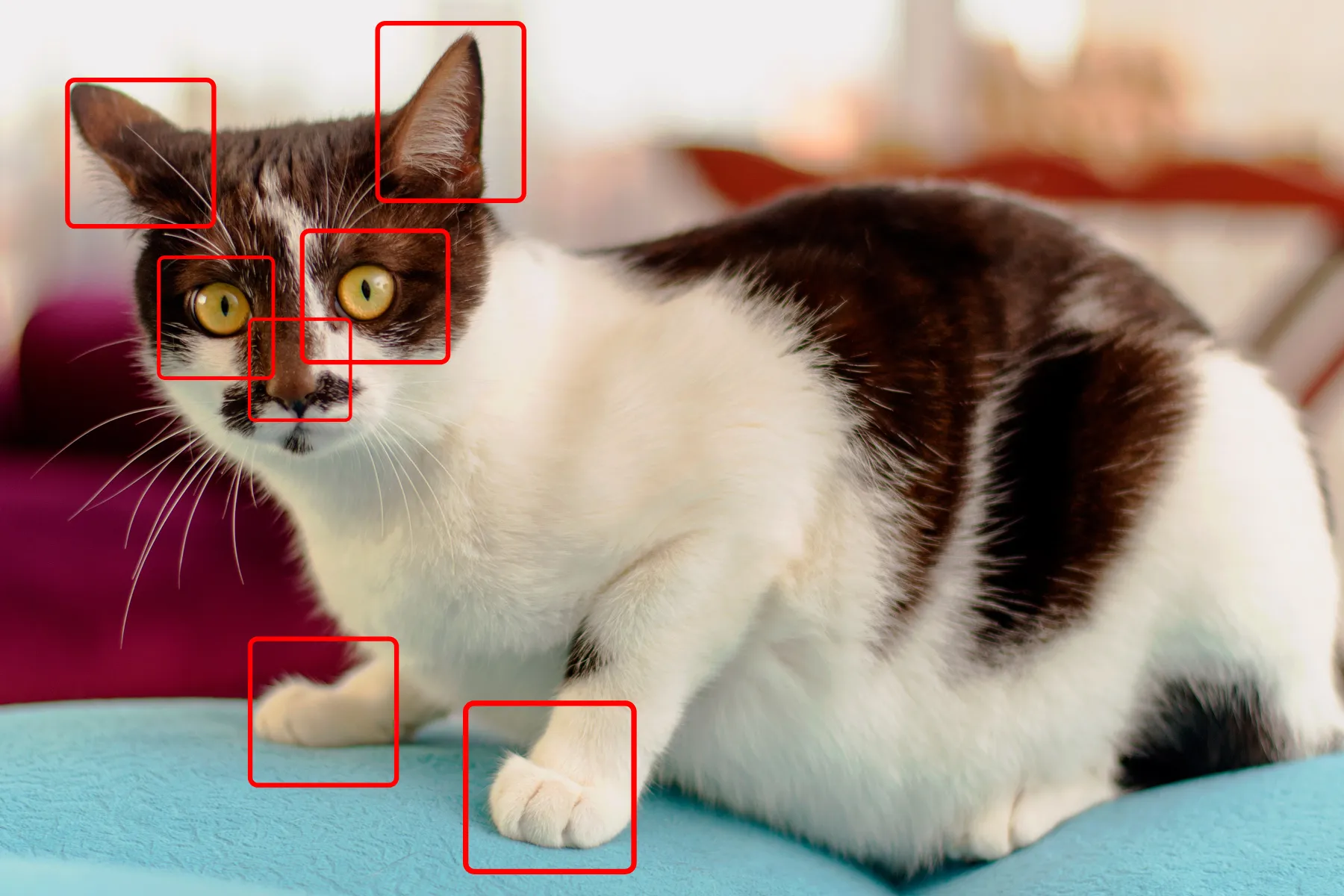

This creates a problem. If a dense network learns to recognize a cat’s eye in the top-left corner of an image, it won’t recognize that same eye if it appears in the bottom-right. It would have to learn the pattern all over again for the new location.

This is where convolutional layers change the game. Instead of looking at the entire image at once, a convolutional layer scans the image in small, localized chunks. It learns to recognize a pattern—like an eye, a whisker, or a wheel—and can then identify that pattern anywhere it appears in the image. This ability to detect patterns regardless of their position is the secret sauce of CNNs.

#The Anatomy of a CNN: Filters, Feature Maps, and Pooling

A CNN is built from a stack of specialized layers that work together to break down an image and understand its contents.

»The Convolutional Layer: The Feature Detector

The main job of a convolutional layer is to find patterns. It does this by sliding small “windows” called filters (or kernels) across the image. A filter is just a small matrix of values representing a specific pattern, like an edge, a corner, or a patch of color.

As the filter slides over the image, it creates a feature map (or response map). This map highlights the areas where the filter’s specific pattern was detected. A single convolutional layer can run dozens of different filters simultaneously, each one searching for a different pattern and creating its own feature map.

You can also tune how the filter moves with two key parameters:

- Padding: This adds a border of pixels around the image, ensuring the filter can process the edges properly and that the feature map stays the same size as the original input.

- Strides: This defines the step size of the filter as it moves across the image. A stride of 1 moves one pixel at a time, while a stride of 2 skips every other pixel.

»The Pooling Layer: The Shrinking Machine

After detecting features, a CNN needs a way to summarize them. That’s the job of a pooling layer. Its goal is to reduce the size of the feature maps, making the network faster and more efficient.

The most common method, Max Pooling, works by sliding a small window (usually 2x2) over the feature map and taking only the maximum value from that window. This process effectively shrinks the feature map while retaining the most important information—the strongest presence of a detected feature. Think of it like squinting at an image to get the general gist without getting lost in the tiny details.

#Putting It All Together: The CNN Architecture

A complete CNN isn’t just one layer; it’s a carefully constructed sequence of them. A typical architecture looks something like this:

CONV -> POOL -> CONV -> POOL -> DENSE -> OUTPUT

This structure creates a powerful hierarchy of feature detection.

- The first few layers act like detail detectors, learning to recognize simple patterns like edges, lines, and colors.

- The middle layers take these simple patterns as input and learn to combine them into more complex shapes and textures, like eyes, noses, or feathers.

- The final layers, usually dense ones, take these complex shapes and learn to classify them into high-level concepts, like a “dog,” a “car,” or a “bird.”

By starting small and building up in complexity, a CNN can learn to recognize almost any object, making it the go-to tool for nearly every modern computer vision task. ✨